Building a macOS app for AI-powered script creation and voiceover recording

Remember my quest to create Mandarin lessons for beginners and record them with AI? I wanted to use OpenAI's GPT-4 model to generate the lessons and then record them with AI voices from OpenAI and ElevenLabs. How hard could it be? Spoiler alert: it took a lot more effort than I expected.

Looking for a shortcut

I started by checking the GPT Store to see whether this functionality already existed as a GPT that calls a text-to-speech (TTS) model through an API. I wasn't optimistic, because most API calls cost money and GPTs in the store can't yet be monetized. But, I thought, perhaps some brand was offering free TTS capabilities to generate interest and leads.

Probably the best such option I found uses a model from ElevenLabs, but it limits recordings to 1,500 characters and ends with a promotion for, quite understandably, ElevenLabs.

Creating a schema for OpenAI's TTS model

Next, I tried creating a GPT Action to connect a custom GPT to OpenAI's TTS API. I began by using ActionsGPT to generate a schema from the API documentation, then worked on troubleshooting both the schema and the overall experience. But the API calls timed out frequently, which made evaluating and debugging the experience prohibitively time-consuming.
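
Whatever the schema looks like, it ultimately has to describe one request: a POST to OpenAI's `/v1/audio/speech` endpoint with a bearer token and a small JSON body. The Swift sketch below builds that request without sending it. The `tts-1` model, the `mp3` format, and the names `SpeechRequest` and `makeSpeechRequest` are my own illustrative choices, not taken from the original setup.

```swift
import Foundation
#if canImport(FoundationNetworking)
import FoundationNetworking // URLRequest lives here on Linux
#endif

/// JSON body for OpenAI's text-to-speech endpoint (illustrative field values).
struct SpeechRequest: Encodable {
    let model: String          // e.g. "tts-1" or "tts-1-hd"
    let input: String          // the script text to be read aloud
    let voice: String          // e.g. "alloy" or "nova"
    let responseFormat: String // "mp3" so the audio can be exported as-is

    // Map the camelCase Swift property to the API's snake_case field name.
    enum CodingKeys: String, CodingKey {
        case model, input, voice
        case responseFormat = "response_format"
    }
}

/// Hypothetical helper: builds (but does not send) a POST to /v1/audio/speech.
func makeSpeechRequest(text: String, voice: String, apiKey: String) throws -> URLRequest {
    var request = URLRequest(url: URL(string: "https://api.openai.com/v1/audio/speech")!)
    request.httpMethod = "POST"
    request.setValue("Bearer \(apiKey)", forHTTPHeaderField: "Authorization")
    request.setValue("application/json", forHTTPHeaderField: "Content-Type")
    let body = SpeechRequest(model: "tts-1", input: text, voice: voice, responseFormat: "mp3")
    request.httpBody = try JSONEncoder().encode(body)
    return request
}
```

In a macOS app, the resulting request could be sent with `URLSession.shared.data(for:)`, and the returned bytes written straight to an `.mp3` file.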

Building a macOS app

Finally, I chose to build a dedicated macOS app with SwiftUI and Xcode to run on my laptop. That way I could dial in the functionality and take as much time as I needed to refine scripts and recordings, limited only by my $15 monthly budget for API calls. Starting with the SwiftOpenAI package, ElevenLabs' API guide, and a custom GPT with access to their documentation, I quickly hacked together and debugged a rough app.
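
On the ElevenLabs side, generating audio comes down to a single POST as well. Here is a minimal Swift sketch, assuming the `/v1/text-to-speech/{voice_id}` endpoint, the `xi-api-key` auth header, and the `eleven_multilingual_v2` model. The voice ID is a placeholder you would look up in your own account, and `makeElevenLabsRequest` is a hypothetical helper, not code from the app.

```swift
import Foundation
#if canImport(FoundationNetworking)
import FoundationNetworking // URLRequest lives here on Linux
#endif

/// JSON body for ElevenLabs' text-to-speech endpoint.
struct ElevenLabsSpeechBody: Encodable {
    let text: String
    let modelId: String // a multilingual model, e.g. "eleven_multilingual_v2"

    // Map the camelCase Swift property to the API's snake_case field name.
    enum CodingKeys: String, CodingKey {
        case text
        case modelId = "model_id"
    }
}

/// Hypothetical helper: builds (but does not send) a POST to /v1/text-to-speech/{voice_id}.
func makeElevenLabsRequest(text: String, voiceID: String, apiKey: String) throws -> URLRequest {
    let url = URL(string: "https://api.elevenlabs.io/v1/text-to-speech")!
        .appendingPathComponent(voiceID)
    var request = URLRequest(url: url)
    request.httpMethod = "POST"
    request.setValue(apiKey, forHTTPHeaderField: "xi-api-key")           // ElevenLabs' auth header
    request.setValue("application/json", forHTTPHeaderField: "Content-Type")
    request.setValue("audio/mpeg", forHTTPHeaderField: "Accept")          // ask for MP3 audio back
    let body = ElevenLabsSpeechBody(text: text, modelId: "eleven_multilingual_v2")
    request.httpBody = try JSONEncoder().encode(body)
    return request
}
```

Keeping request construction separate from sending makes it easy to inspect the headers and body while debugging, which is most of the battle with a new API.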

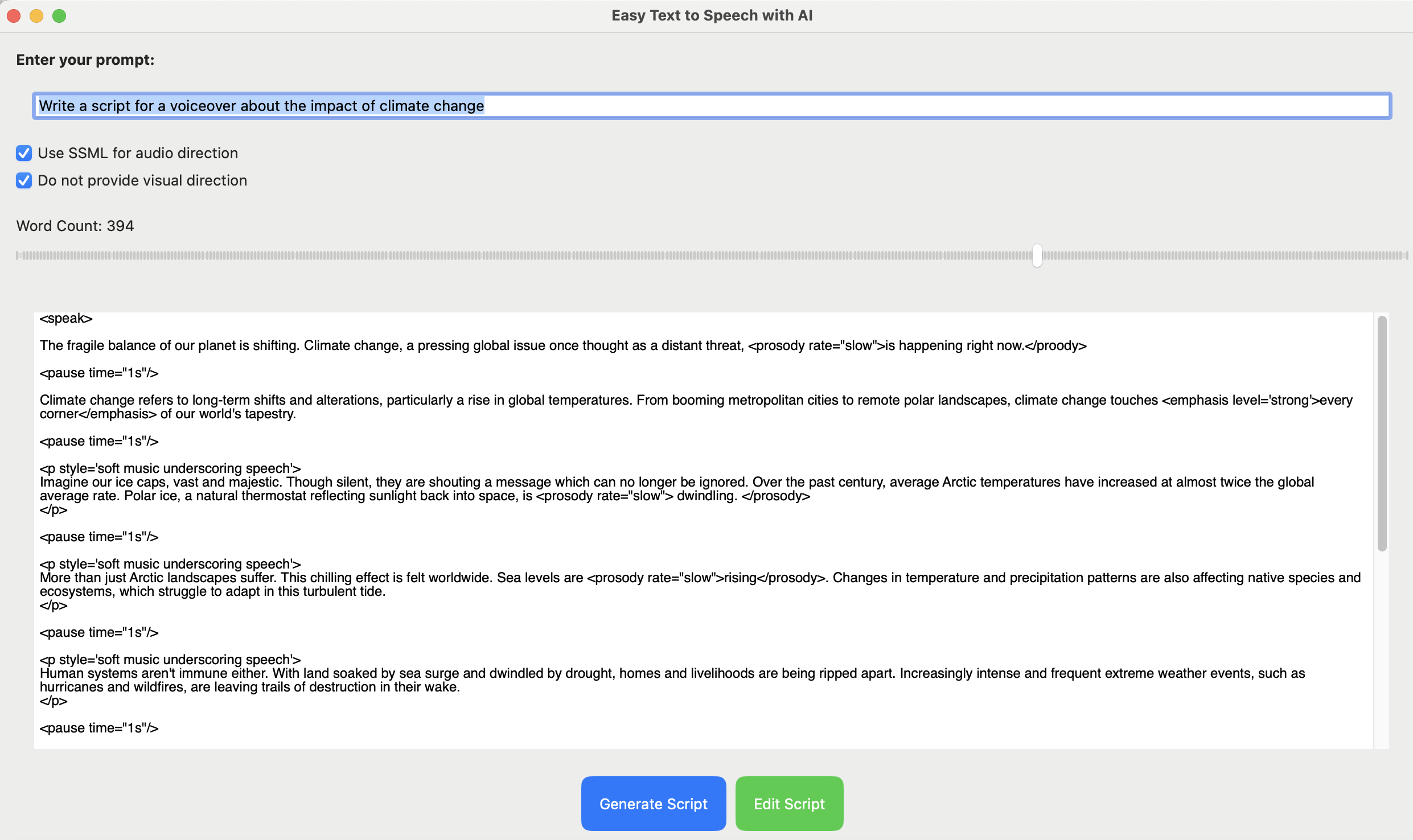

The working prototype, while a bit rough around the edges, offers these features:

- Script generation with prompts: The app generates scripts via OpenAI's GPT-4 model based on user input.

- Prompt customization: Users can specify a target word count and whether the script should include SSML (Speech Synthesis Markup Language) tags or audio directions.

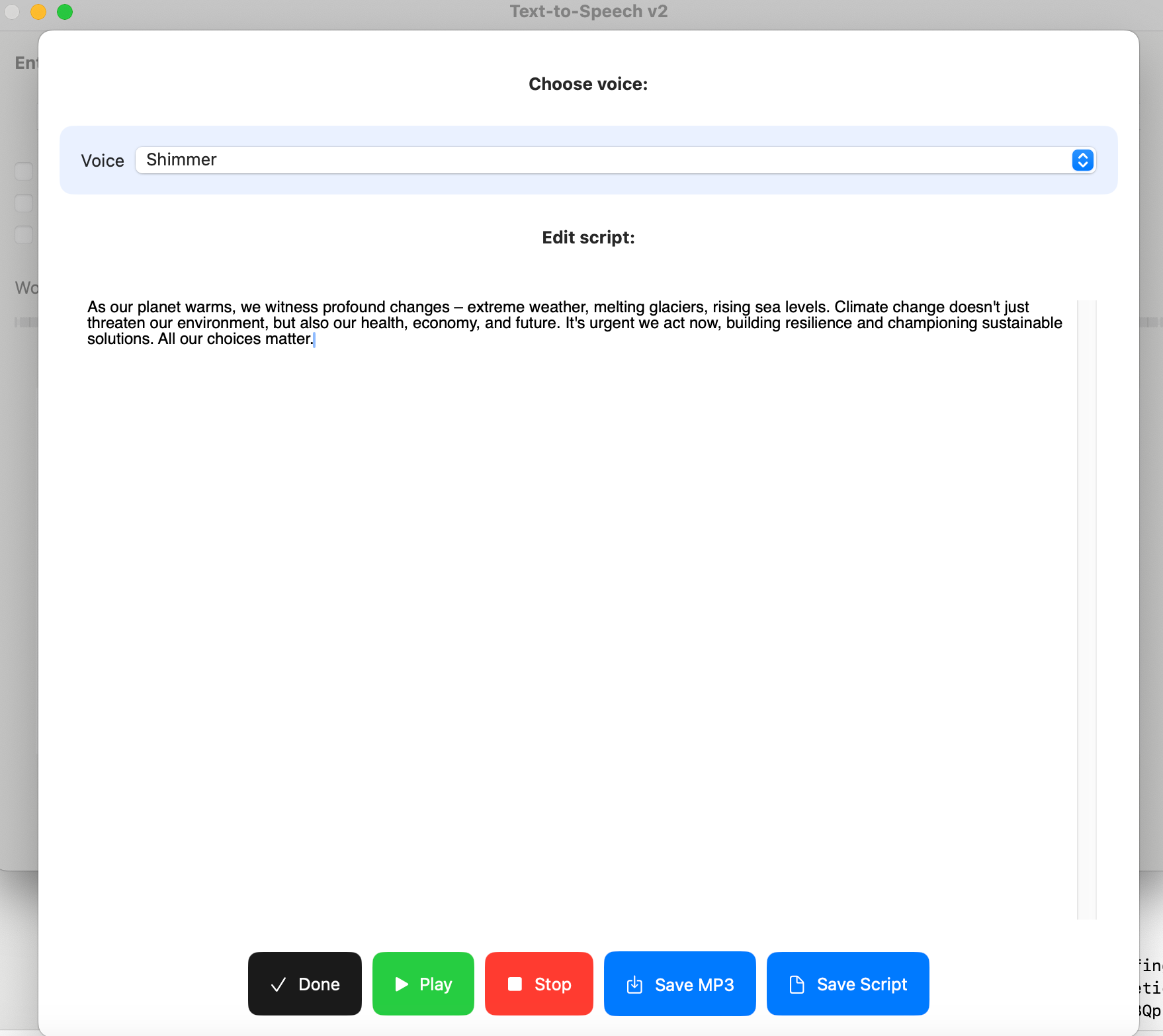

- Script editing, audio playback, and export to MP3: Users can edit generated scripts, play the audio output using voices from OpenAI and ElevenLabs, and download both the script (text) and the audio (MP3). The audio player supports play, pause, and stop.
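
The prompt-customization options can be pictured as a small function that turns the user's settings into instructions for GPT-4. This is a hypothetical sketch: the `ScriptMarkup` enum, `buildScriptPrompt`, and the exact prompt wording are my assumptions, not the app's actual prompt.

```swift
import Foundation

/// Hypothetical markup choices mirroring the app's prompt-customization controls.
enum ScriptMarkup {
    case plainText
    case ssml           // e.g. <break time="1s"/> for pauses
    case audioDirection // e.g. [slowly]
}

/// Assembles the instruction sent to the model from the topic and user settings.
func buildScriptPrompt(topic: String, wordCount: Int, markup: ScriptMarkup) -> String {
    var lines = [
        "Write a voiceover script about: \(topic).",
        "Aim for about \(wordCount) words."
    ]
    // Add exactly one formatting instruction, depending on the chosen markup.
    switch markup {
    case .plainText:
        lines.append("Return plain text only, with no markup or stage directions.")
    case .ssml:
        lines.append("Mark pauses and emphasis with SSML tags such as <break time=\"1s\"/>.")
    case .audioDirection:
        lines.append("Add brief audio directions in square brackets, such as [slowly].")
    }
    return lines.joined(separator: "\n")
}
```

Keeping the settings in an enum means the UI (a picker, say) and the prompt text can't drift out of sync: every option the user can pick maps to exactly one instruction.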

Overall, it does enough of what I was looking for that I should now be able to easily create and record language lessons, plus anything else that comes to mind.

The code and some disclaimers

If you have a MacBook running an up-to-date version of macOS and would like to try the app, you can download the code from GitHub. Here are a few quick tips and reminders:

You will need API keys from OpenAI and ElevenLabs; the README file on GitHub explains how to get them. OpenAI typically provides some free API credits to new users, and ElevenLabs offers free API keys for testing purposes.

Be careful with your API keys. Never hard-code API keys into an app you publish or otherwise share; it's a great way to get your keys stolen. Read up on best practices for obfuscating API keys.
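
One common alternative to hard-coding during development is to read keys from environment variables, which Xcode lets you set per scheme (Edit Scheme > Run > Arguments > Environment Variables). A minimal sketch, assuming the hypothetical variable names `OPENAI_API_KEY` and `ELEVENLABS_API_KEY`; `loadAPIKey` and `MissingAPIKey` are illustrative names, not part of any library.

```swift
import Foundation

/// Thrown when the expected environment variable is absent or empty.
struct MissingAPIKey: Error {
    let variableName: String
}

/// Reads an API key from the environment instead of hard-coding it in source.
/// The environment is injectable so the lookup can be tested without real keys.
func loadAPIKey(named variableName: String,
                from environment: [String: String] = ProcessInfo.processInfo.environment) throws -> String {
    let raw = environment[variableName] ?? ""
    // Strip stray spaces or newlines that often sneak in when pasting keys.
    let key = raw.trimmingCharacters(in: .whitespacesAndNewlines)
    guard !key.isEmpty else { throw MissingAPIKey(variableName: variableName) }
    return key
}
```

Usage: `let openAIKey = try loadAPIKey(named: "OPENAI_API_KEY")`. Variables set in a scheme only apply when the app is launched from Xcode, and a shared scheme file can end up in version control, so for anything you distribute the macOS Keychain is the more usual home for secrets.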

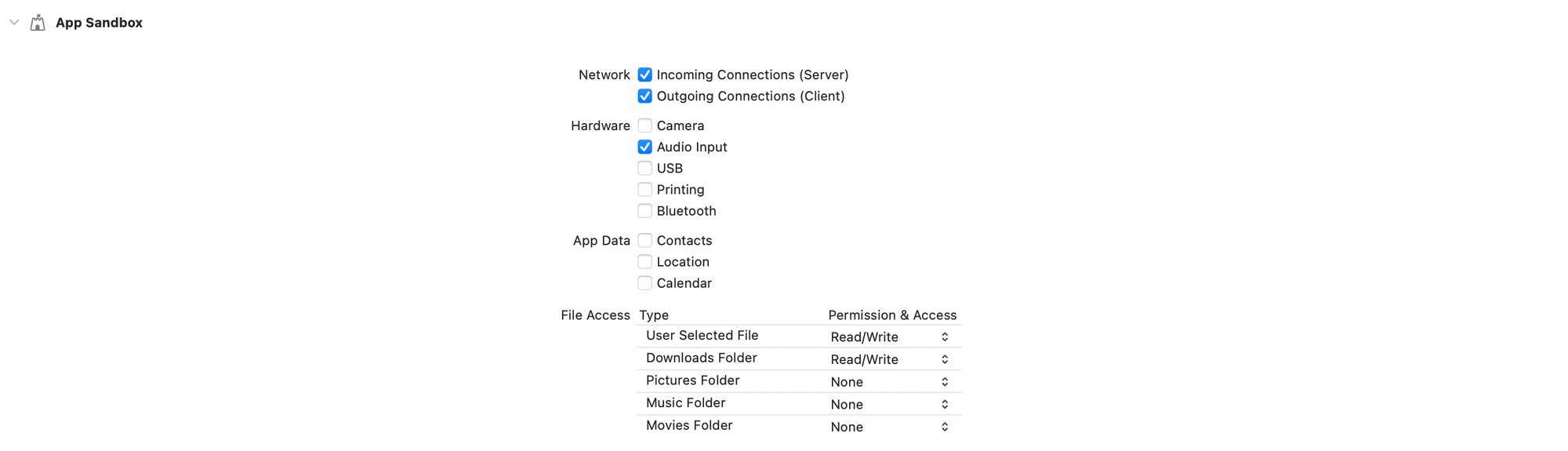

Give your project the correct network, audio, and file-access permissions. In Xcode, these are typically enabled as App Sandbox options under your target's Signing & Capabilities tab.

Add the SwiftOpenAI package to your project as a dependency before you start working with the code.

Remember that OpenAI's usage policies require disclosing that recordings are AI-generated. A quick blurb in the intro or outro does the job.
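
Because the disclosure is just text that gets spoken, one simple approach is to add it to the script before sending it to either TTS service. A minimal sketch; the function name and default wording are hypothetical, so check the wording against OpenAI's current usage policies.

```swift
/// Where the AI-voice notice should be spoken.
enum DisclosurePlacement {
    case intro
    case outro
}

/// Returns the script with a short AI-voice disclosure added at the start or end.
func addAIDisclosure(to script: String,
                     placement: DisclosurePlacement,
                     notice: String = "This recording uses an AI-generated voice.") -> String {
    switch placement {
    case .intro: return notice + "\n\n" + script
    case .outro: return script + "\n\n" + notice
    }
}
```

Doing this at the script level means the notice ends up in both the exported text and the MP3, whichever voice provider reads it.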

Bugs, limitations, and next steps

Since I'm fairly new to SwiftUI and used GPT-4 extensively as a co-pilot, the code likely contains some outdated syntax and inefficiencies. Also, the UI is very basic and not especially attractive. But I'm generally happy with the app, and I'm planning to evolve it further.

The experiment continues...