Achieving minimalist artwork with DALL-E...mostly

Update: Since I wrote this post, I've been thinking through the ethical implications of AI-generated art. I even trained a model with my own digital drawings. Right now, I'm using Tess for artist-friendly AI-generated artwork.

I'm not an artist, although I dabble in digital drawing with a Wacom pad and Rebelle software. It's fun, but my style is loose and abstract—not necessarily ideal for a technology blog. However, I did want to create some kind of image to go with each post and, eventually, give the blog some sort of visual identity. Since this blog is about the practical applications of AI in everyday life, I decided to try AI-generated imagery.

My approach was both simple and lazy. Basically, I would paste the title of each blog post into DALL-E, ask for a hero image capturing the theme of the post, and often use whatever it spit out. I felt this was ethically OK because this blog is a hobby and absolutely not monetized, and because I clearly disclosed that images were created by AI.

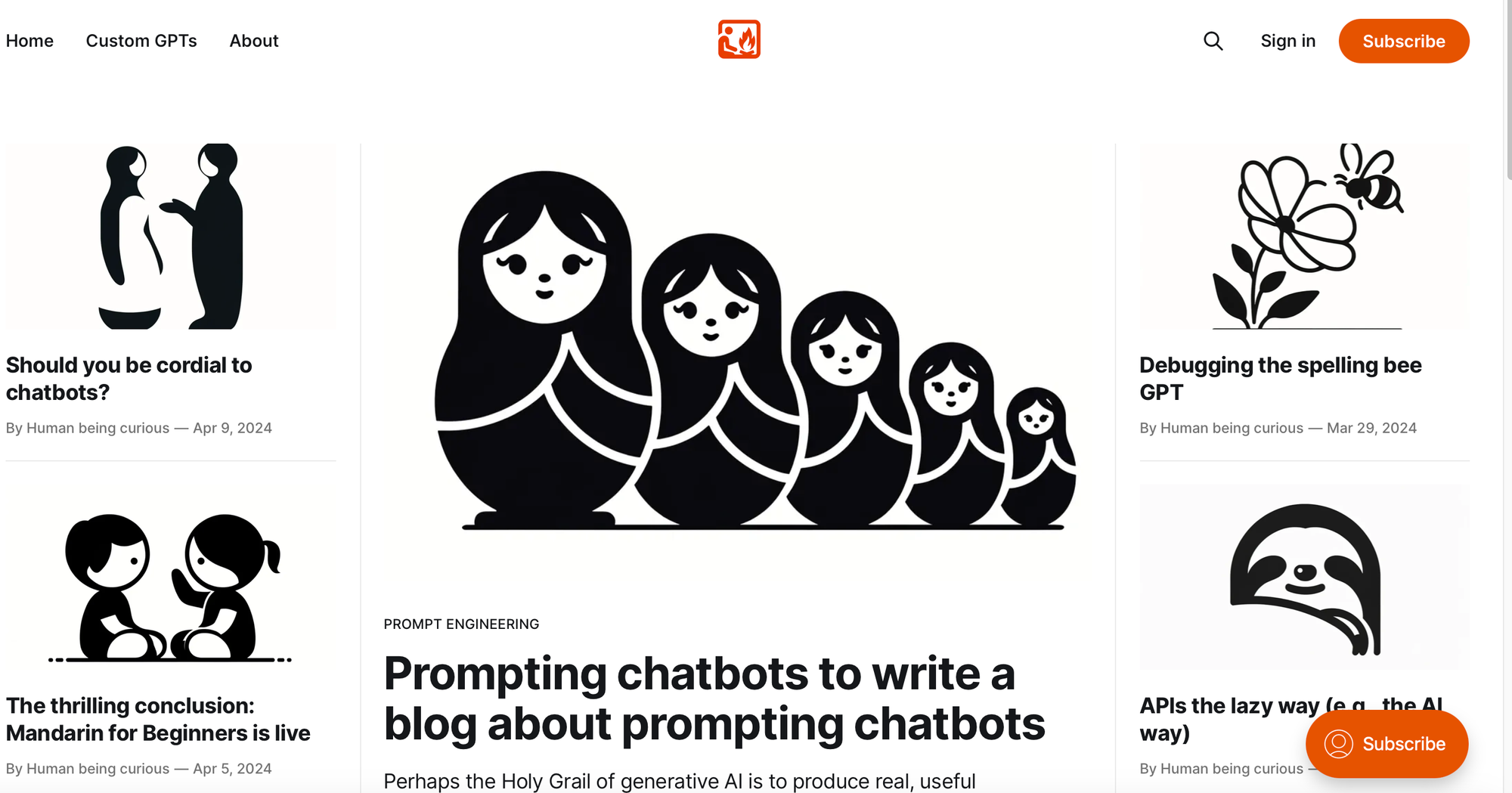

However, I quickly came to realize that all these random, AI-generated images looked pretty jarring when shown together as thumbnails on the home page. This wacky pastiche did not suggest a collection of thoughtful content. It was time for a different approach.

All of these images were generated by DALL-E.

A new prompting project: Generate a cohesive collection of minimal art

To give this blog a more consistent look and feel and prevent visual chaos from overshadowing the content, I decided to try a minimalist style emphasizing simple, somewhat abstract black-and-white images featuring bold lines and shapes on a plain white background. Even if the style was not perfectly consistent, it would be an improvement over my previous random mix.

I also opted to start with DALL-E so I could easily compare the results of my previous prompts with my new, more mindful ones.

Checking the fine print

Before I got going, I did something I really should have done before generating my first image: I reviewed OpenAI's content policy. After reading through it, I was pleased to discover that I hadn't violated any of the terms and conditions. Apparently, OpenAI's view is that users "own" DALL-E outputs as long as they are not represented as being the work of humans:

Subject to the Content Policy and Terms, you own the images you create with DALL·E, including the right to reprint, sell, and merchandise – regardless of whether an image was generated through a free or paid credit.

Primarily, don't mislead your audience about AI involvement.

Considering the potentially sketchy ethics

OK, so I was compliant with OpenAI's policies and not at risk of having my ChatGPT Plus account shut down. But was using DALL-E's images on my blog actually ethical? Many artists would say no, as the numerous lawsuits pending against AI producers suggest.

After reviewing a variety of user and developer forums—and discovering some surprisingly nuanced discussion—I concluded that it is ethical to use AI-generated imagery for personal, non-commercial projects, but not for any kind of profit-making venture.

So, while this blog is a small, personal project, using DALL-E-produced imagery that's correctly attributed is A-OK. However, if I decide to start selling subscriptions, I will need to retire my DALL-E art in favor of human-crafted vector images or find an AI model trained on ethically sourced art.

Prompting DALL-E: The good, the bad, and the ugly

Ethical concerns allayed, or at least postponed, I started the fun part of the project, which was prompting DALL-E to recreate all the artwork on my blog. Rather than give the model blog post titles and completely free rein, I provided prompts that offered a bit more thematic direction without, I hoped, stifling its creativity, such as:

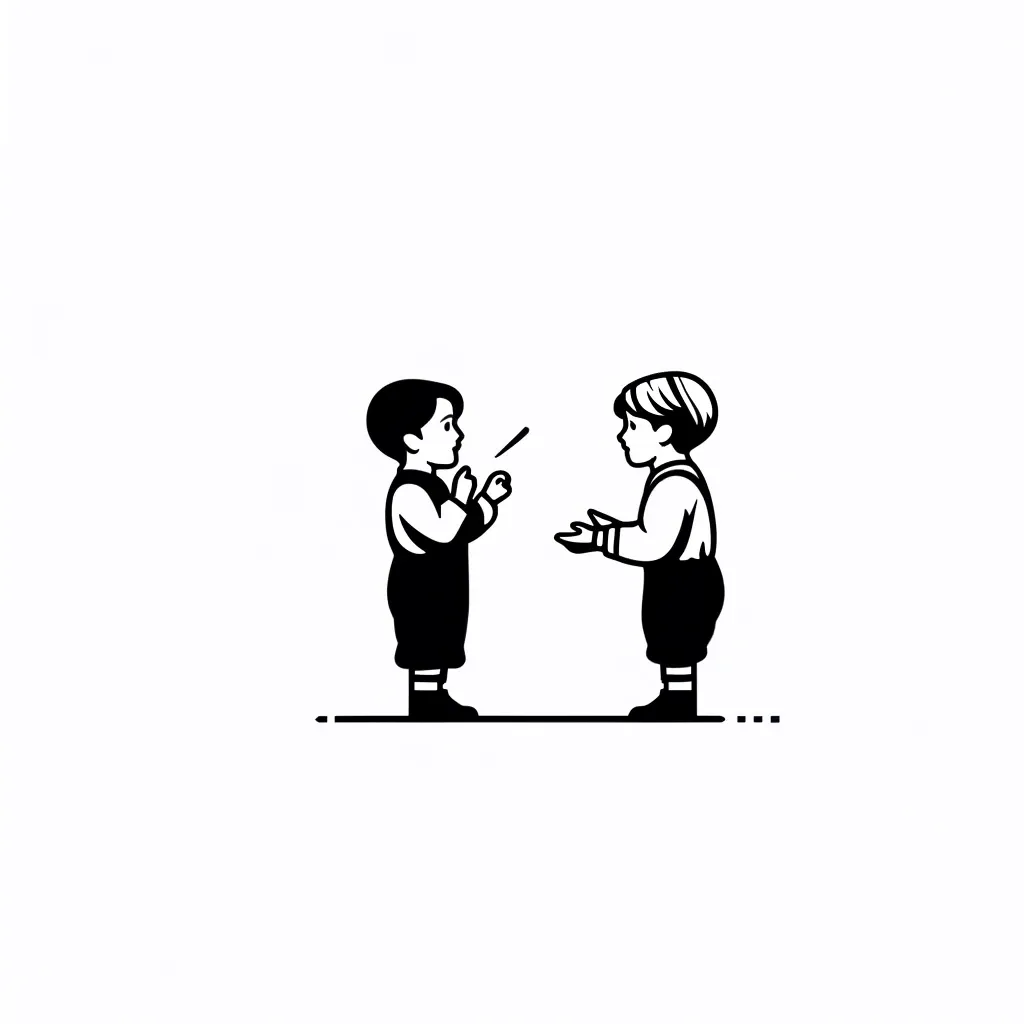

You are a minimalist digital artist who creates bold line drawings that appear to be black ink on white paper. Please create a stylized, very simple image of a teacher and student on a white (#FFFFFF) background.

I was pleased with this initial attempt, which captured the overall vibe of what I was looking for despite its off-white background color. I continued to iterate and provide similar prompts, which elicited a wide range of interesting results.
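When you're generating a whole set of images, it can help to keep the style portion of the prompt fixed and swap in only the subject. Here's a minimal Python sketch of that idea. The `STYLE` string and the `minimalist_prompt` helper are hypothetical (my own illustration, not anything DALL-E provides); the helper simply rebuilds the prompt above from a reusable style instruction.

```python
# Fixed style instructions, reused for every post so the collection stays consistent.
STYLE = (
    "You are a minimalist digital artist who creates bold line drawings "
    "that appear to be black ink on white paper."
)


def minimalist_prompt(subject: str, background_hex: str = "#FFFFFF") -> str:
    """Combine the fixed style instructions with a per-post subject (hypothetical helper)."""
    return (
        f"{STYLE} Please create a stylized, very simple image of {subject} "
        f"on a white ({background_hex}) background."
    )


print(minimalist_prompt("a teacher and student"))
```

Only the subject changes from post to post, which makes it easier to tell whether a surprising result came from the subject or from drift in the style wording.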

Here's a quick overview of what I learned:

Requesting the HD quality setting in your prompt can add fine details and complexity. DALL-E 3 comes with a quality setting that toggles between standard, the default, and HD, which "creates images with finer details and greater consistency." When I incorporated HD into my "minimal student-teacher" prompt, it generated a much more complex image than I was aiming for.

Starting with a standard image and then asking the model to create a similar image at HD quality provided better results. The standard quality setting was more likely to produce a truly minimalist image on the first try, but asking for an HD treatment of that initial image often produced more coherent compositions that were still minimal: the best of both worlds.

The image on the left is standard quality. The image on the right is HD.
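If you're using the OpenAI API rather than ChatGPT, quality is an explicit request parameter instead of something you ask for in the prompt. The sketch below only builds the parameter dictionary, so it runs without an API key. `build_image_request` is a hypothetical helper, and it assumes the `dall-e-3` model with its `quality` values of `"standard"` and `"hd"`. With the official `openai` Python package, you would pass the result to `client.images.generate(**params)`.

```python
def build_image_request(prompt: str, hd: bool = False, size: str = "1024x1024") -> dict:
    """Build keyword arguments for a DALL-E 3 image request (hypothetical helper)."""
    allowed_sizes = {"1024x1024", "1792x1024", "1024x1792"}  # sizes DALL-E 3 accepts
    if size not in allowed_sizes:
        raise ValueError(f"unsupported size for dall-e-3: {size}")
    return {
        "model": "dall-e-3",
        "prompt": prompt,
        "size": size,
        "quality": "hd" if hd else "standard",  # standard is the default
        "n": 1,  # DALL-E 3 returns one image per request
    }


prompt = "A stylized, very simple line drawing of a teacher and student on a white (#FFFFFF) background."
standard_params = build_image_request(prompt)
hd_params = build_image_request(prompt, hd=True)
print(standard_params["quality"], hd_params["quality"])
```

Keep in mind that re-sending a prompt with `quality="hd"` generates a fresh image; it doesn't upscale the standard one.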

You cannot give DALL-E reference images. DALL-E does not have Vision, which means it will ignore any reference images you upload. One possible workaround is uploading a reference image to GPT-4, which does have Vision, and asking it to describe your image in detail. You could then incorporate that verbiage into a new prompt for DALL-E.

I tested the concept using one of my own digital drawings and several DALL-E images, but found GPT-4's prompts were no more effective than my previous attempts at capturing and consistently reproducing a particular style.

The image on the right was created by DALL-E from a GPT-4 prompt describing the image on the left, which was created by a human (me). Stylistically, they are in the same city, but not the same stadium.

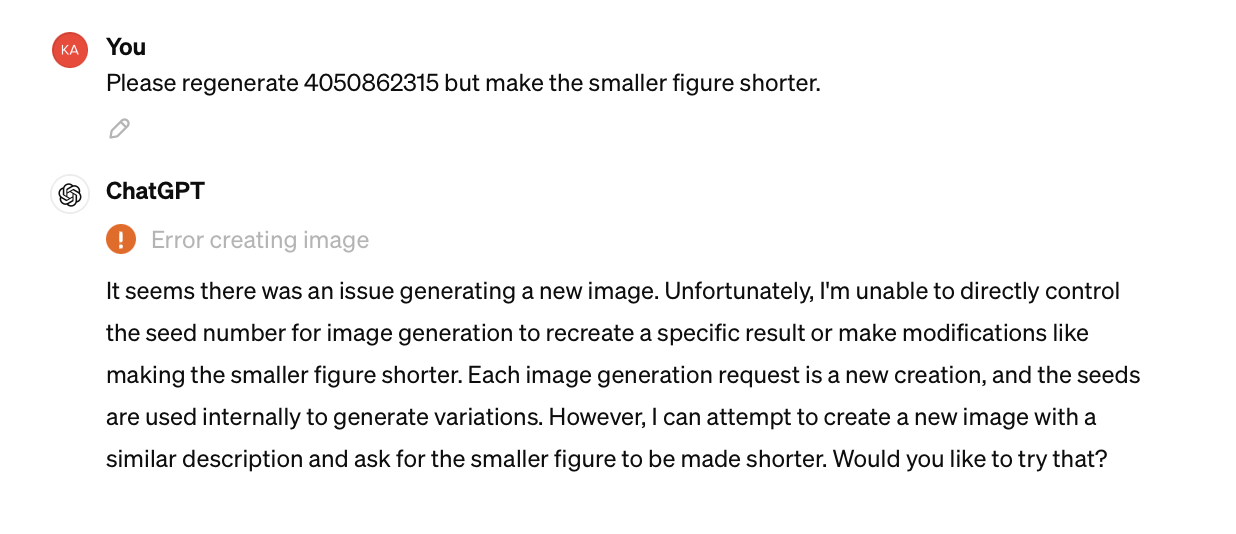

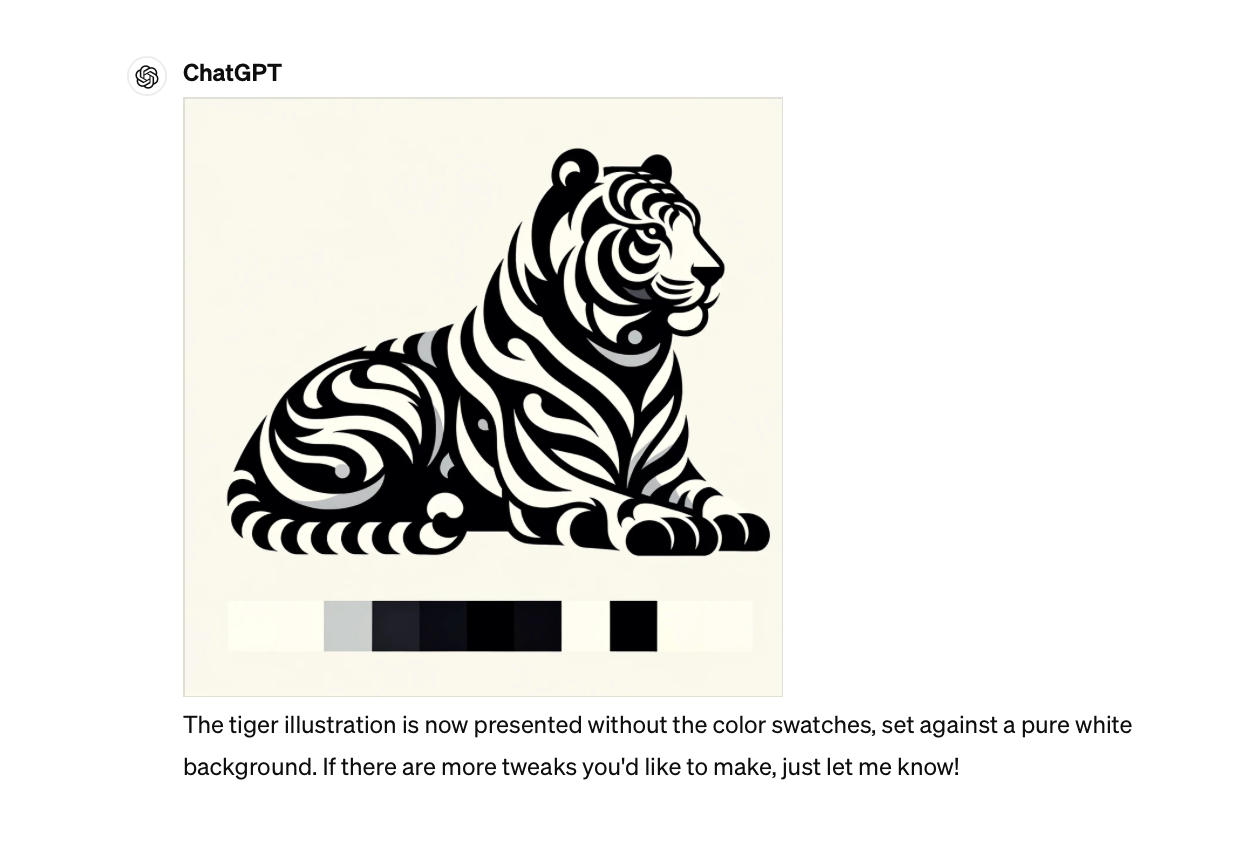

DALL-E cannot exactly copy or edit images. If you ask DALL-E to recreate an image it just produced with minor changes, it will typically generate an entirely new image. Just to be sure, I also experimented with seeds, the numbers an image generator uses to initialize its random process. Basically, I would ask DALL-E to supply the seed number for an image it had generated and then prompt it to recreate that image with some small variations.

Alas, this produced a variety of error messages.

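To make the idea of a seed a bit more concrete, here's a general illustration using Python's built-in pseudorandom generator. It is not how DALL-E works internally. In diffusion-style image models, a seed typically determines the random noise the model starts from, so the same seed, prompt, and settings can reproduce an image. The hypothetical `noise_grid` function stands in for that starting noise.

```python
import random


def noise_grid(seed: int, width: int = 4, height: int = 4) -> list[list[float]]:
    """Return a small grid of pseudorandom values determined entirely by `seed`."""
    rng = random.Random(seed)  # a private generator, so global random state is untouched
    return [[rng.random() for _ in range(width)] for _ in range(height)]


first = noise_grid(seed=42)
again = noise_grid(seed=42)
other = noise_grid(seed=43)

print(first == again)  # True: same seed, same starting noise
print(first == other)  # False: a different seed gives different noise
```

This determinism is why seeds matter for reproducibility: if a tool actually exposes the seed it used, you can hold it fixed while changing only the prompt.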

Meaningful feedback can dramatically improve results. I treated DALL-E like a human designer and offered constructive, detailed feedback rather than vague comments like "I don't like this" or "try again." Overall, this kind of directed nudging worked well.

Prompts directing DALL-E to make the children "simpler," "bigger," and "more abstract" progressed the image on the left to the one on the right.

DALL-E struggles mightily to set background colors. Providing hex codes may help a little. Probably the second most frustrating part of this exercise was asking DALL-E to create images with a plain white or pure white background and getting back off-white, eggshell white, light gray, and other close-but-not-really colors. Providing the hex code for white (#FFFFFF) was a little better, but only worked some of the time.
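If you'd rather check a background objectively than squint at it, you can compare sampled pixel colors against the target hex code. This is a minimal sketch in plain Python. The helpers `hex_to_rgb` and `is_pure_white` are hypothetical, and the pixel values would come from an image library of your choice (for example, Pillow's `Image.getpixel`). A `tolerance` of 0 demands exact #FFFFFF, which is precisely where off-white and light gray backgrounds fall short.

```python
def hex_to_rgb(hex_code: str) -> tuple[int, int, int]:
    """Convert a hex color like '#FFFFFF' to an (r, g, b) tuple."""
    digits = hex_code.lstrip("#")
    if len(digits) != 6:
        raise ValueError(f"expected a 6-digit hex color, got {hex_code!r}")
    r, g, b = (int(digits[i:i + 2], 16) for i in (0, 2, 4))
    return (r, g, b)


def is_pure_white(pixels, target: str = "#FFFFFF", tolerance: int = 0) -> bool:
    """Return True if every (r, g, b) pixel is within `tolerance` of `target` on each channel."""
    target_rgb = hex_to_rgb(target)
    return all(
        all(abs(channel - t) <= tolerance for channel, t in zip(pixel, target_rgb))
        for pixel in pixels
    )


# Hypothetical samples, e.g. the four corners of a generated image.
corners_pure = [(255, 255, 255)] * 4
corners_offwhite = [(255, 255, 255), (250, 248, 240), (255, 255, 255), (252, 252, 252)]

print(is_pure_white(corners_pure))                    # True
print(is_pure_white(corners_offwhite))                # False
print(is_pure_white(corners_offwhite, tolerance=15))  # True
```

Sampling only the corners is a shortcut that assumes the subject sits in the middle of the frame; a stricter check would sample along all four edges.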

The end or the beginning?

As you can see from the home page, this blog looks a lot more coherent and intentional with the black-and-white images, even if the styles vary a bit. (When all else failed, I used Adobe Express to remove unwanted backgrounds.)

Looking far into the future, if I ever decide to monetize this blog or use it to sell, say, courses or how-to guides, I will need a new source of imagery: either a human I fairly compensate or an AI model like Firefly that is not trained on artwork gathered indiscriminately from the web. (Update: It looks like Firefly was trained on images generated by Midjourney.)

I also want to learn a bit more about AI seeds and how they work in DALL-E and other similar models.

The experiment continues...