APIs the lazy way

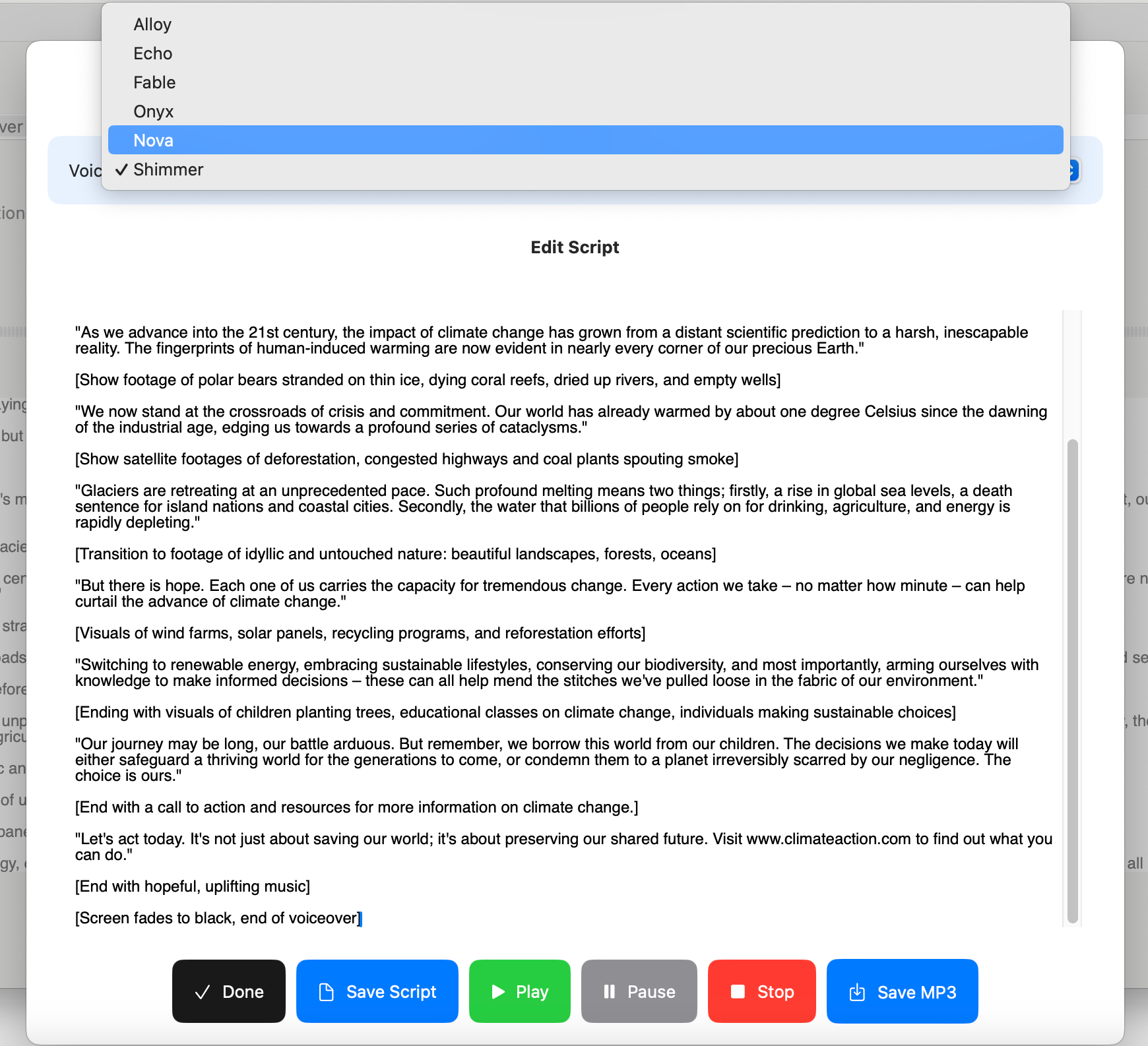

I'm still tinkering with my voiceover scripting and recording app. I've made some decent progress, and now I can easily choose from all six of OpenAI's voices when playing audio or saving it as an MP3 file. I also took a shortcut by using AI to review OpenAI's API reference docs.

Let your AI read the API

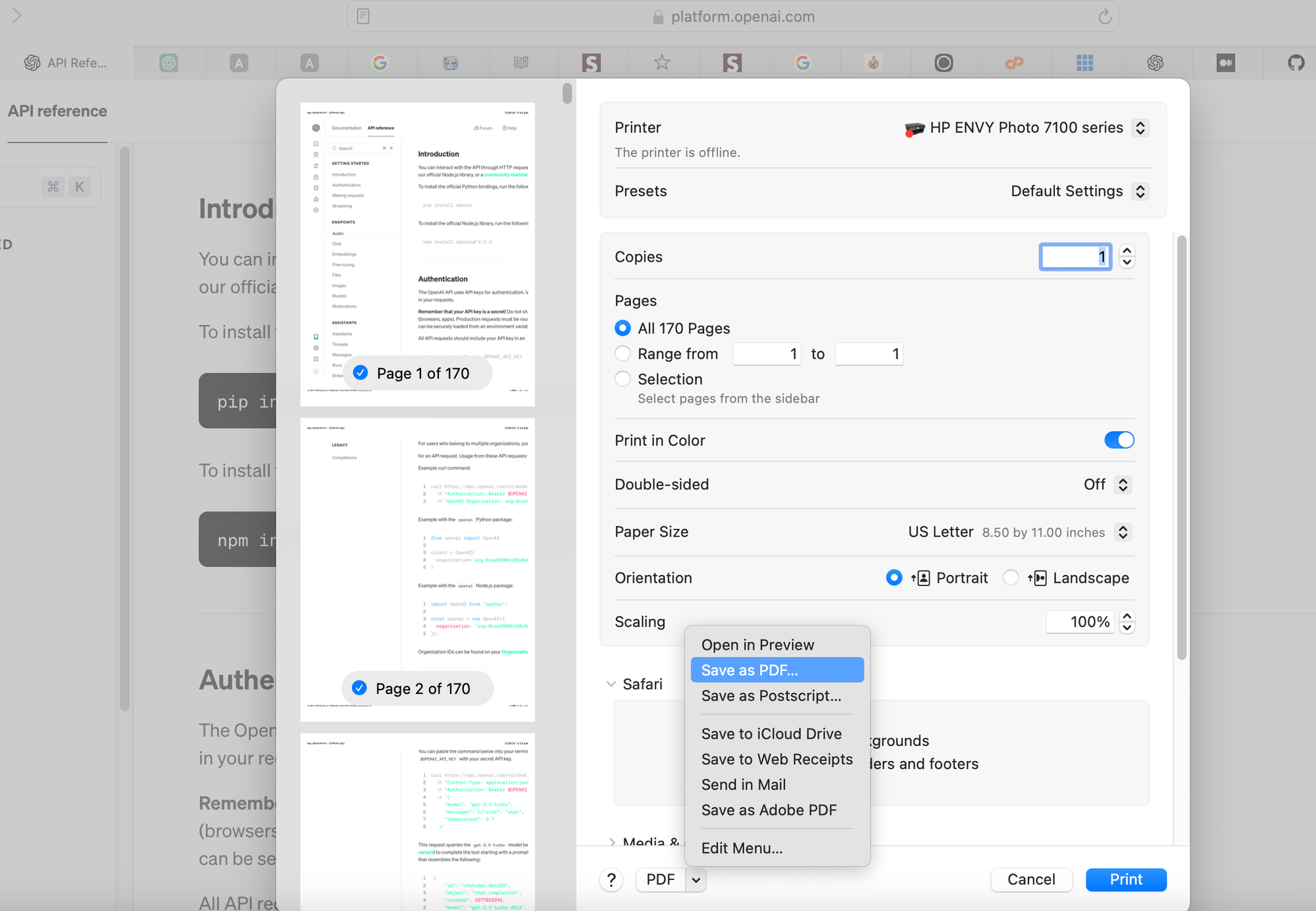

I updated my custom SwiftUI-coding GPT, which already knows the SwiftOpenAI package, with OpenAI's API reference document saved as a 165-page PDF.

After this step, I successfully prompted the AI to suggest the SwiftUI code updates necessary for my simple voice picker. I suspect I could use the same approach if I decide to swap out OpenAI's TTS model for, say, ElevenLabs or another service.
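For anyone curious what the voice-picker plumbing might look like, here is a minimal sketch. It is not the code from my app, and it deliberately skips the SwiftOpenAI package in favor of plain Foundation so it stands on its own. The names `Voice`, `SpeechRequestBody`, and `makeSpeechBody` are made up for illustration. The six voice names and the `model`, `input`, `voice`, and `response_format` fields are the ones OpenAI's speech endpoint (`POST /v1/audio/speech`) documented at the time of writing, so check the current reference before relying on them.

```swift
import Foundation

// Hypothetical enum: the six voice names the speech endpoint
// accepted when this was written.
enum Voice: String, CaseIterable, Encodable, Identifiable {
    case alloy, echo, fable, onyx, nova, shimmer

    // Identifiable lets SwiftUI's ForEach iterate over the cases directly.
    var id: String { rawValue }
}

// Hypothetical struct: the JSON body for POST /v1/audio/speech.
struct SpeechRequestBody: Encodable {
    var model = "tts-1"          // assumed model name
    var input: String            // the script text to speak
    var voice: Voice             // the voice chosen in the picker
    var responseFormat = "mp3"   // ask for MP3 audio back

    // Map the Swift property name to the API's snake_case key.
    enum CodingKeys: String, CodingKey {
        case model, input, voice
        case responseFormat = "response_format"
    }
}

// Encodes the request body as JSON with keys in a stable, sorted order.
func makeSpeechBody(text: String, voice: Voice) throws -> Data {
    let encoder = JSONEncoder()
    encoder.outputFormatting = [.sortedKeys]
    return try encoder.encode(SpeechRequestBody(input: text, voice: voice))
}
```

In a SwiftUI view, a `Picker` bound to a `@State` property of type `Voice`, with a `ForEach(Voice.allCases)` inside it, would list all six options. Swapping in another service would mostly mean replacing the enum cases and the request body.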

Over the coming days and weeks, I will continue to refine this app when time allows. The UI needs a ton of work, but progress is steady.

The experiment continues...