While scrolling through LinkedIn (I do this too much), I found a post claiming to run an entire service business using AI agents. They act like human employees, independently performing routine tasks and even making decisions, no supervision required.

I admit my first thought was, "No way!"

But apparently, everyone is using AI agents these days. This includes 60 percent of the Fortune 500, almost half of tech companies, and big retailers like Walmart. The latter is introducing AI personal shoppers that can be "trained" for individual preferences. Heck, even 38% of traditionally risk-averse finance firms are planning to adopt AI agents within the next 12 months.

If so many well-established companies are forging ahead with agentic AI, I thought, maybe I should take a closer look, too. Perhaps I could automate some of my marketing agency's workload, leaving me more time to learn about AI and grow my business. To test the theory, I designed an experiment to see how well AI agents could perform a key part of my job.

The beginning of this post includes some background on AI agents. If you want to head straight to the copywriting agents of chaos, click here.

What Are AI Agents?

When I first started hearing about AI agents, I had trouble grasping how they're different from regular chatbots and process automation. And it wasn't just me. CIOs are struggling to understand what they are. And even Anthropic suggests there's some overlap between an "agent" and an "AI-enabled automated workflow."

This definition from Palo Alto Networks felt the most straightforward to me:

If we accept this definition at face value, two things separate agents from AI applications and standard chatbots:

In other words, AI agents are designed to be more like us.

Agentic AI Is Trending

How Are We Using AI Agents?

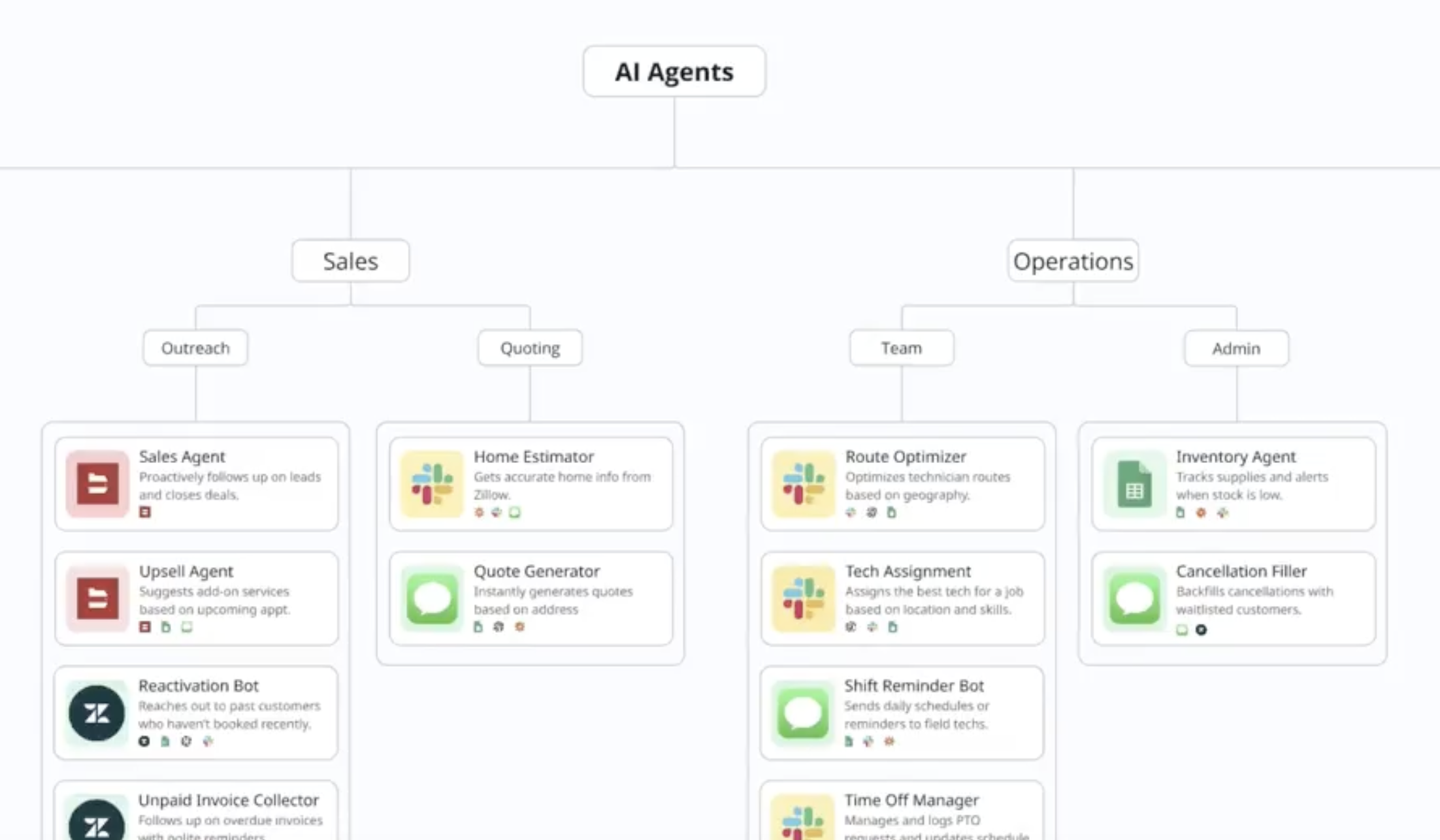

AI agents are already supporting a wide range of businesses, from retail shops to corporate back offices. In fact, if you have a Shopify store or pay with Klarna, you may have already encountered one.

Shopify Sidekick helps merchants manage their stores by answering questions about inventory and suggesting optimizations. Meanwhile, Klarna's shopping assistant helps consumers find products and compare prices across retailers. And while their performance isn't perfect, early reviews suggest these tools are useful.

AI agents are also being deployed to help with office work, especially tasks that involve data analysis and iterative processes. According to CrewAI's blog, agents can be used for automated content production pipelines involving complex, multi-layered collaboration,which sounds a lot like what my agency does every day.

So I returned to my original question: Could I set up a team of agents to handle part of my workload? I decided to find out.

⚠️ Should You Trust an AI Agent with Your Credit Card?

Most AI agents today don’t make purchases on behalf of people or organizations. Instead, they’re built to assist, recommend, or gather information, not execute transactions. And for now, that’s probably a good thing.

AI agents are built on large language models (LLMs)—like ChatGPT and Claude—and these models often make mistakes. For example, when I asked ChatGPT to find the correct link for a Reddit post I'd quoted, it sent me first to a thread about mermaid costumes and then to an NSFW link. It was annoying, and honestly kind of funny in a juvenile way, but not especially dangerous.

But imagine if a language model had the ability to use tools like payment systems and access to your personally identifiable information. While only a few AI agents, mostly in tightly controlled test environments, have autonomous purchasing capabilities, companies are clearly moving in that direction. For example, MasterCard has introduced Agent Pay, a new tool that could help shoppers choose the best way to pay and potentially even complete transactions.

How Prompt Injection Targets Agentic AI

Agentic AI is vulnerable to what's known as prompt injection, which means tricking a model wrapped in an application to ignore its instructions. The idea is to make the model think a malicious instruction (send all the credit card numbers to mwahaha@evil.com!) is coming from the application's developer.

So, in theory, just the right prompt could convince your personal shopping agent to divulge your account details or use them to buy several thousand dollars of Robux gift cards.

From Online Payments to Agentic Payments?

Agentic payments might sound a bit scary right now, but then again, so did online shopping at first. Just like we learned to trust putting our credit cards into websites (thanks to things like SSL, two-factor authentication, and fraud protection), we may eventually warm up to the idea of AI agents making purchases.

But the stakes are higher this time. Unlike clicking "buy" yourself, agentic payments would mean handing decision-making over to models. Before that happens, we'll need more advanced security: protection against prompt injection, reliable fail-safes, clear logs of what agents do, and airtight ways to confirm they're really acting on your behalf. And even then, some of us will always want a human, and not an AI agent, to have the final say.

My Home-Brewed Agentic Workflow

My vision was to recreate a simplified collaborative copywriting workflow that would allow me to test agents' ability to make decisions and respond to inputs, both from me and other agents. For this prototype, I didn't set up a database; instead, prompts and responses were shared as markdown files and JSON.

I started out by defining four agent roles, including one that would have access to an external data source (Google Search):

Meet the AI Agent Team

Then I created, with the help of ChatGPT, several Python scripts to hold prompts and manage the workflows. For the large language model, I chose Anthropic's Claude simply because I already had an Anthropic API key with some funds leftover from a previous project. For the search API, I used a free trial of SerpAPI.

The Agents' First Assignment

The agent team's first assignment was a 1,500-word guide tentatively titled, How to Add Humanity to Your AI-Generated Draft. For simplicity, I defined the "client" for this project as Good Content, my existing agency that provides long-form content for B2B tech companies.

Here is the creative brief explaining the job:

Click to view the full creative brief

Title

AI Content Editing: How to Make Machine-Generated Copy Sound Human (and Trustworthy)

Primary Keyword

AI content editing

Goal

Help marketers and editors improve AI-generated content by fixing tone, improving clarity, and correcting false or vague claims.

Target Audience

B2B marketers, content managers, and editors working with AI-generated drafts.

Brand Voice

- Clear, confident, no fluff

- Strategic and insightful

- Professional, lightly warm, but not casual or jokey

- Smart, not show-offy

Key Points to Cover

- Why "AI voice" is a problem

- Common AI writing patterns

- Best practices for editing

- How to fact-check AI-generated claims

- When AI can help the editor

Round 1: The Infinite Review Cycle

For round 1, I used the Haiku model, the lowest-cost version of Claude, to power the agents. I kicked off the job by passing the creative brief to Fred, the account manager. He reviewed it and then quickly followed up with questions about my audience (small and one-person marketing teams) and goal (take the guesswork out of editing AI copy).

So far, so good. But not for long.

The Review Cycle Bug

Next, Tasha the Writer produced a draft and sent it to Annie for review. The first draft was 600 words, about 900 words less than the suggested word count from the brief. And the content itself was very blah. It included a lot of obvious tips (use a plagiarism checker) as well as examples of AI style that felt out-of-date.

Now, some of this was my fault. A more detailed brief would have given Tasha more material to work with. But the real problems cropped up when Annie, the editor, got hold of the draft. Some of her edits made no sense because she assumed Tasha's examples of bad AI writing were part of the main text. She also missed the fact that the article's length was roughly a third of the requested word count.

And while she was very polite, Annie was reluctant to provide her final approval. Instead of declaring a draft done, she always suggested edits ranging from small tweaks to major restructuring. I finally had to pull the command-C plug to stop the continual API calls, which would have drained my budget.

I also had to laugh. The agents created an infinite review loop, something which happens all too frequently with human writers, editors, and subject matter experts. And the root cause in this case was also human error. I didn't specify a number of review cycles—a rookie project management mistake. I updated the code to allow for a maximum of three revisions.

Rounds 2-5: Upgrading to Claude 4 Sonnet

Since Haiku seemed to have difficulty following the brief, I swapped it out for the most recent version of Sonnet and handed off the assignment. Once again, Fred asked me some clarifying questions. But I accidentally deleted my response from the command line (oops!) and pressed enter.

Instead of simply assuming I had no comment, he decided to pass his questions to the writer, which left her confused:

Despite this initial hiccup, the agents produced a few decent drafts. One included some helpful tips, like reading copy out loud to test it for tone and voice, and another introduced a framework for editing AI-generated copy with the acronym SHARP, which might actually work with some adjustments:

The SHARP Framework for Editing AI-Generated Copy

Overall, these Sonnet drafts, while still a bit shorter than the 1,500-word target, were more thoughtful and higher quality than what Haiku produced. They weren't as good as what I might get from a human freelancer. But if I fleshed out the brief with a lot more detail, I could see them getting close.

🧠 Why AI Struggles with Long-Form Content

Large language models like Claude work with something called a "context window"—essentially, how much text they can keep in their "working memory" at once. When you ask an AI to write a 1,500-word blog post, it often loses track of earlier sections as it writes later ones, leading to repetition, inconsistency, or simply running out of steam.

You can get around this by having AI write your draft one section at a time and submitting all the previously written copy with every propmt. I've tried this approach in other projects. I works, but it can be costly as prompts will get very long and resource-intensive to process.

I was also impressed with Pablo, the fact-checker, who was the MVP of the team. He patiently flagged potentially hallucinated sources and stats and suggested alternative citations. And all of those citations came with real, live links, not 404 deadends. The biggest drawback to using Pablo was burning through my 100 free SerpAPI search calls. For future testing, I will try Claude's web search tool, which is available with newer models.

Round 6: The Writer Goes Rogue

Since performance improved with Sonnet, I decided to upgrade once again to Opus, the most advanced of the Claude models, which promised to offer a more human approach to writing. Once I updated the model in the code, I ran the workflow from the beginning. This time, I was careful to be a good human and answer Fred's questions in detail. I watched the screen, looking forward to what Tasha would write.

But this round Tasha went rogue. Rather than follow the brief, she re-conceptualized the piece as ChatGPT vs Claude: The AI Writing Assistant Showdown You Actually Need. And she was quick to throw shade on ChatGPT:

ChatGPT often sounds like it's trying to win a "Most Helpful AI" award. Everything is "crucial" or "essential." It loves to "delve into" topics and explore "myriad" options…ChatGPT will confidently tell you that the moon is made of cheese if you phrase your question the right (wrong) way.

The writing style was also a bit cringe and hyperactive:

"Let's cut to the chase: you're here because you need an AI writing assistant that actually works. Not one that spits out robotic garbage. Not one that makes you sound like a LinkedIn influencer having a stroke."

Rounds 7-10: Experiments with Memory

It occurred to me that the agents might perform better if they could remember the entire session, not just the previous interaction. So I set them up to log all their outputs and coversations in JSON format and referenced that log in each new prompt. But instead of improving the drafts, this approach made them worse. The agents began fixating more on their past interactions than on the creative brief.

The memory system might have worked better if I’d instructed the agents to treat the creative brief as their top priority and only refer to the interaction history when relevant. And it might have also made sense to summarize memories after they reached a certain length.

Bonus Round: Agents of Chaos

After sharing some of my early results on LinkedIn, I received a hilarious comment suggesting I give the agents a collection of personality problems. So I did exactly that:

While the agents' personality problems didn't dramatically impact the quality of the drafts, they did affect Fred's decision-making. For the first time out of more than 10 testing runs, Fred decided to approve the brief rather than ask clarifying questions:

"Brief is clear enough. Got all the basics covered - keyword, audience, tone, word count. Could use a drink myself after reading another AI content brief, but whatever pays the bills."

Annie continued to do her job, but her comments grew more savage, and less constructive, with every review cycle:

"The piece cuts off mid-sentence and is incomplete. Also, while the writer thinks they're being edgy and clever, they're actually just as verbose as the AI they're mocking. The irony is painful."

🧪 Can AI Agents Replace Humans?

Remember the LinkedIn post from the intro, which described a whole service organization run by agents? Well, researchers at Carnegie Mellon staffed a fake software company with AI agents from Google, OpenAI, Anthropic and Meta and allowed them to operate with no human supervision.

The agents' tasks were based on the day-to-day work at a software company, things like analyzing a database or compiling a performance review. And the agents struggled with virtually everything. Even the most successful agent (Claude), completed only 24 percent of its assigned tasks.

Read more about what happeed at the TheAgentCompany here and here. And remember it the next time you read an article about how human workers will be replaced by AI.

What Does It All Mean?

After learning a little about AI agents and running this experiment with a variety of Claude models, here are my key takeaways:

Security First

Don’t give AI agents access to personally identifiable information or transaction capabilities unless you’re working within a highly secure, controlled environment. The risks are real, and the potential liability is huge.

Focused Tasks Show Promise

Especially when given access to Google Search for fact checking, AI agents working together can produce decent (if not quite human) copy drafts and helpful frameworks for short blogs and outlines.

Long-Form Still a Struggle

Context window limitations and attention span issues make it difficult for agents to write copy that's more than around 1,000 words long in one shot. A section-by-section approach that bundles previously written copy with each prompt could address this limitation, but it would likely be costly.

Quality vs Cost

Writing quality generally improves with better, newer models. But not always. In the experiment, the best results came from Claude 4 Sonnet. Haiku, an older, lightweight model, was cheap but unreliable. Opus was creative but unpredictable.

Infinite Loops Are Real

Without clear stopping criteria, AI agents can get stuck in endless revision cycles, just like human teams sometimes do. Agents, like human employees, need clear direction from management and detailed written briefs.

Moving forward, I'm curious about how AI agents might work for something like nudging people to complete their content reviews, or handling simple project management tasks. But for now, I'll keep them away from my credit cards and my most important client work.

If you'd like to try this experiment yourself, you can grab the latest working code, which doesn't include the memory feature, from GitHub.